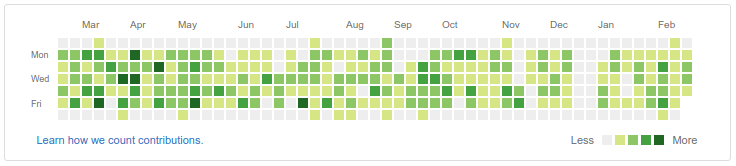

I just finished my tenth full year as a software professional, and I’m starting my third year with Code.org. One of the perks of this job is that all of my work is public on GitHub. So let’s put it under a microscope!

Stats

I like numbers, so we’ll start with that – a nice, ten-thousand-foot view. All stats in this section are for dates 2016-02-20..2017-02-15 (because we gave peer feedback in February).

I merged 538 pull requests this year, up from 410 last year. 491 (91%) of those were within the code-dot-org organization.

- 460 on code-dot-org/code-dot-org

- 17 on code-dot-org/p5.play

- 9 on code-dot-org/piskel

- 3 on code-dot-org/react-color

- 1 on code-dot-org/reactcss

- 1 on code-dot-org/playground-io

- 47 outside of code-dot-org (though the vast majority are work-related upstream changes)

I made 1,487 commits on code-dot-org/code-dot-org, ranking #1 by commit count to that repository. That’s actually down from 1,748 last year, lowering my average commits per pull request from 4.26 to 3.23.

I changed +117,594 / -126,514 lines on code-dot-org/code-dot-org, ranking #6/#7 by lines changed. Lines changed is often a misleading statistic (you get a lot of credit for checking in generated content or extracting libraries), but I’m happy that I removed more code than I added this year. This is also down from +277,976 / -231,200 last year.

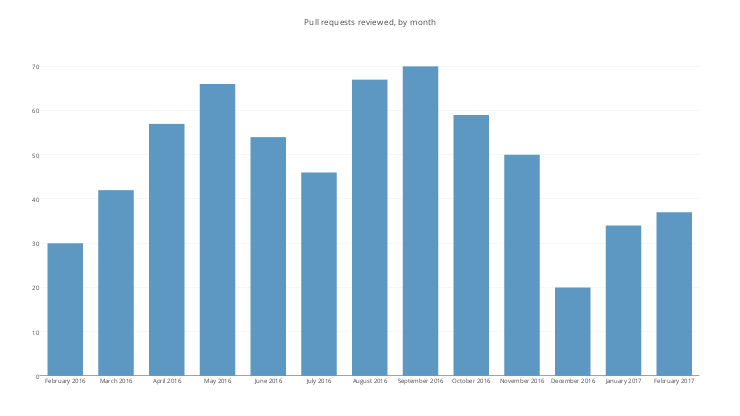

I participated in 823 pull requests by other people, up significantly from 298 last year. Counting code reviews is tricky, especially since GitHub rolled out review requests in December. We can infer some things though.

- I commented on 768 pull requests.

- Of those I commented on, 605 were assigned to me, up from just 128 last year. This is probably the best estimate of “code reviews” though I can think of cases where it doesn’t fit.

- GitHub only identifies 259 pull requests as reviewed by me.

That’s about 2.5 reviews per working day on average (605 reviews / 247 working days), up from 0.52 last year. I think it’s also notable that I reviewed more PRs than I merged this year. This suggests a huge change in my role on the team, and I’ve felt it.
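As a quick check on the arithmetic above, here is a minimal Python sketch that recomputes the per-day review rate from the numbers quoted in this post. The function name is just for illustration; the 247 working days is the divisor already used above.

```python
# Figures quoted in the post; 247 working days is the post's own divisor.
WORKING_DAYS = 247


def reviews_per_day(reviews: int, working_days: int = WORKING_DAYS) -> float:
    """Average number of reviews per working day."""
    return reviews / working_days


# "Reviews" here means PRs assigned to me that I also commented on.
this_year = reviews_per_day(605)
last_year = reviews_per_day(128)

print(f"this year: {this_year:.2f} reviews/day")   # this year: 2.45 reviews/day
print(f"last year: {last_year:.2f} reviews/day")   # last year: 0.52 reviews/day
print(f"change: {this_year / last_year:.1f}x")     # change: 4.7x
```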

I contributed to at least 20 repositories in my work at Code.org this year, where contribution includes reporting issues and participating in discussion, in addition to submitting code and documentation changes. 14 of these were outside the code-dot-org organization.

Features

Of course, it’s important to look at meaningful contributions in addition to raw numbers. Here are some changes I’m proud of this year.

Game Lab

I spent most of my year on a tool called Game Lab that’s used in our new CS Discoveries course for grades 6-9. Game Lab is an online JavaScript IDE (using droplet and similar to App Lab) with a built-in game engine (p5.play) and animation editor (Piskel). The tool is still in beta but it’s already a lot of fun to play with – I’m quite proud of Tennis Jump.

I did a lot of work on the p5.play engine this year, including lots of new test coverage and a collision system overhaul that hasn’t been accepted upstream yet but we are using on our fork.

I integrated Piskel into Game Lab, which involved loading it in an iframe within our React-rendered UI and writing a nice API that Game Lab can use to communicate with it.

I designed and built an S3-backed Animation Library for Game Lab that enables fast keyword search, is easy to update and propagates library changes across environments as we deploy new code.
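This post doesn’t go into how the library’s search works, so the following is only a sketch of one common approach to fast keyword lookup: precompute an inverted index from keyword to animation names, then intersect the sets for each word in the query. The manifest shape, animation names and function names are all hypothetical, not the actual Game Lab implementation.

```python
from collections import defaultdict


def build_index(manifest: dict[str, list[str]]) -> dict[str, set[str]]:
    """Map each lowercase keyword to the set of animation names tagged with it."""
    index: dict[str, set[str]] = defaultdict(set)
    for name, keywords in manifest.items():
        for keyword in keywords:
            index[keyword.lower()].add(name)
    return index


def search(index: dict[str, set[str]], query: str) -> list[str]:
    """Return animations matching every word in the query, sorted by name."""
    words = query.lower().split()
    if not words:
        return []
    # .get() avoids adding empty entries to the defaultdict for unknown words.
    matches = [index.get(word, set()) for word in words]
    return sorted(set.intersection(*matches))


# Hypothetical manifest: animation name -> keywords.
manifest = {
    "bunny_hop": ["animal", "bunny", "jump"],
    "frog_jump": ["animal", "frog", "jump"],
    "tennis_ball": ["sports", "ball"],
}
index = build_index(manifest)
print(search(index, "animal jump"))  # ['bunny_hop', 'frog_jump']
print(search(index, "Ball"))         # ['tennis_ball']
```

Building the index once (for example at deploy time) keeps each query down to a few dictionary lookups and set intersections.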

Internet Simulator

The Internet Simulator was my biggest project in 2015. It’s a real-time online multiplayer network simulation tool, used in our CS Principles course to explore everything from binary encoding and the shared wire problem to routing and DNS.

The ‘multiplayer’ part of the simulator gives it some unique challenges among the tools on our site, especially when it comes to scaling up. I did some careful projections for Fall 2016, and we managed to scale simulator traffic up about 550% over our peak pilot traffic in Fall 2015 without issue.

Public Key Cryptography Widget

I implemented a redesigned version of our Public Key Cryptography widget. It also gets used in CS Principles. This was a fun bit of greenfield React work that you can see here on GitHub.

Testing improvements

I separated our JavaScript tests into a unit test suite and integration test suite. This had major benefits for iteration speed and presented a cleaner interface for running tests.

I built a progress page for our UI tests and started keeping build logs and test output on S3. Before that, we were scanning a Slack room to see whether tests were done and what had failed.

I helped the team improve Circle CI test stability from less than 50% reliable to more than 95% reliable. We have a separate acceptance test environment that catches things before we ship, but making Circle CI run usefully on all of our pull requests catches problems early and has a big positive impact on team productivity.

My main contributions to this effort were presenting a weekly report of stability metrics and top actionable issues, numerous fixes, a generalized build flag system, and even a fix to Applitools.
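To give a sense of what a stability report like this boils down to, here is a minimal sketch that computes a pass rate and the most frequently failing tests from a list of build results. The record shape, test names and function name are assumptions for illustration; this is not the actual report tooling.

```python
from collections import Counter


def stability_report(builds: list[dict], top_n: int = 3):
    """Return (pass rate, most frequently failing tests) for a set of builds."""
    # A build counts as passing when it has no failed tests.
    passed = sum(1 for build in builds if not build["failed_tests"])
    pass_rate = passed / len(builds) if builds else 0.0
    failures = Counter(
        test for build in builds for test in build["failed_tests"]
    )
    return pass_rate, failures.most_common(top_n)


# Hypothetical week of builds.
builds = [
    {"id": 1, "failed_tests": []},
    {"id": 2, "failed_tests": ["ui/login", "ui/drag"]},
    {"id": 3, "failed_tests": ["ui/login"]},
    {"id": 4, "failed_tests": []},
]
pass_rate, top_failures = stability_report(builds)
print(f"pass rate: {pass_rate:.0%}")  # pass rate: 50%
print(top_failures)                   # [('ui/login', 2), ('ui/drag', 1)]
```

Ranking failures by frequency is what turns a raw pass rate into a short list of actionable issues.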

Technical debt

I overhauled our main README. It used to be much harder to scan. People seem happy with my changes – very little has been done with it since.

I set up a way to share color constants between Ruby and JavaScript. We probably should have done this a long time ago. Since then the team has found ways to share other code as well.

I kept lots of JavaScript dependencies up-to-date. React, React again, webpack, lodash, lodash again, React yet again, react-color, pusher-js…

I gave our apps a top-level React wrapper. A year ago, we had been using React and Redux in isolated pockets but hadn’t yet made it a major paradigm across our application. In February-March 2016 I upgraded React to the latest stable version and gave all of our apps a top-level React wrapper so we could start building from the outside-in (the way React prefers to be used). This was a large change across several PRs that laid the groundwork for a lot of our new development this year, and I think it has had a clear positive impact (7000, 7026, 7051, 7063, 7083, 7148, 7301, 7328).

Strengths

Looking back on the year and reviewing my peer feedback, here are some things I did well.

Code craftsmanship. This was the most common theme in my peer feedback, showing up in terms like “technical depth,” “clarity,” “methodical,” “architecture,” and “cares about creative and interesting solutions.” I care about this a lot and see it as my most natural talent as a software developer.

Pull request descriptions. My pull requests are the best documentation of my changes and the reasons for them, and I regularly refer back to them. If we ranked PR description quality, I suspect I sit near the top of the team, on a team that (in my experience) is already above average among open-source projects when it comes to documenting changes. My peers also called this out as one of my strengths. Examples: My last three closed PRs (13312, 13286, 13252), or this one from nearly a year ago.

Professionalism. Noted by several of my peers. Outside of pull requests, I do pretty well at other documentation (work on our READMEs, tech specs, etc) and at surfacing my progress to interested parties. I’m willing to jump into any part of our project and try to pick up tasks that nobody wants to do. I try to be available to answer questions at all times and I likewise lean on the team and ask questions when I am blocked. I express passion for our work.

Planning, projections and scaling. This is a new strength for me this year – not necessarily estimation of how long work will take (I’m still pretty bad at that) but of what sort of traffic we expect and can maintain. The planning and projections we did for Internet Simulator were extremely useful. I’ve learned a ton about what goes into handling lots of traffic this year.

Automation and tooling. Another new strength. I’ve done quite a bit of internal support work this year: building better dev team tools, upgrading libraries and cleaning up the way we use dependencies, making our tests run better, and trying to get things out of our collective brain-cache. My peers called this out in their feedback too.

Team influence. A third new strength. A recurring theme in feedback was appreciation of my work on test stability, especially the weekly reports. My manager called out that instead of coming across as a complaint or a name-and-shame session, I managed to be encouraging and help us make steady progress.

Goals

What will I work on next? This question keeps getting harder to answer, but here are some possibilities:

Measuring my defect rate. Looking back I have a suspicion that I introduced fewer bugs this year than I have in past years, but I realized I don’t have data to back that up. I need to learn how to measure this and start tracking it.

More consistent test coverage. This still isn’t 100% natural to me, and recent code coverage instrumentation has revealed that the Internet Simulator code is not covered as well as I thought (although we don’t get many bug reports about it anymore).

I need a new book. Reading I’ve done in the past has had a major impact on my practice, but I haven’t read a new software book in a while. Maybe something on software architecture?

Find a new multiplier on the team. I’ll keep looking for the next thing we can automate to keep development running smoothly. Maybe automating my weekly test stability reports, or lowering our test runtime, or getting us to true continuous deployment. Not sure exactly what it will be yet.

Brad,

After so many years, now that I’ve started to know a bit of code and my wife has become a programmer, I realize you are such a genius. I wish I had known that when I was at school, but I barely knew anything about code at that time; all I knew was that you and your wife are great people.

Thanks MZ! I hope you’re doing well!